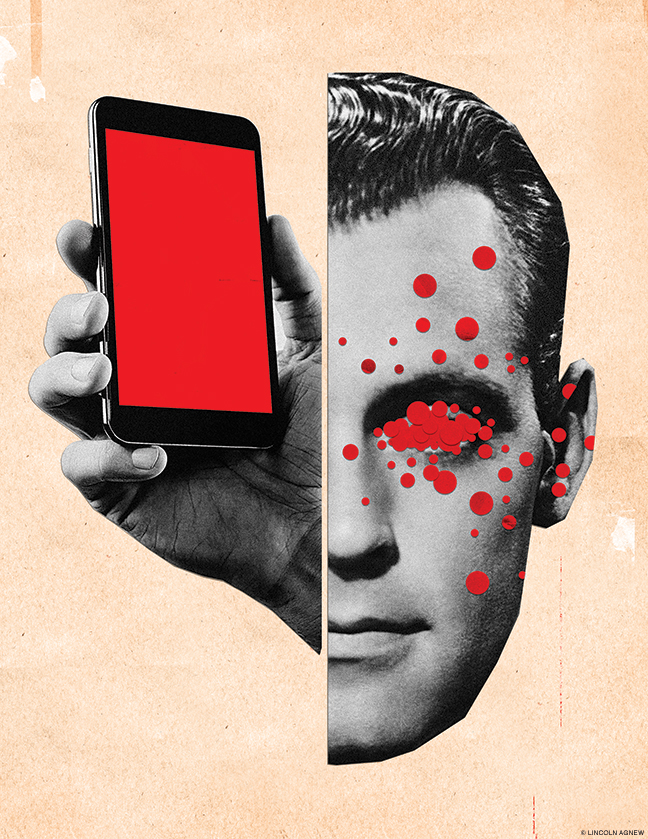

Fake news may be as old as news itself, but the viral deceptions mutating on the internet are affecting the institutions that inform our democracy. Some Penn scholars offer analysis, context, and concerns.

BY SAMUEL HUGHES | Illustration by Lincoln Agnew

Falsehood flies, and the Truth comes limping after it …

—Jonathan Swift in The Examiner, 1710.

World War III is a guerrilla information war with no division between military and civilian participation.

—Marshall McLuhan, Culture Is Our Business, 1970.

The strange bacillus known as Pizzagate grew out of a Wikileaks dump. From that fecund trove of emails hacked from the Clinton campaign it spread, mutating like something out of The Hot Zone, deploying the algorithms of bots and the hidden agendas of sock puppets to infiltrate the body politic. Clues lurked in codes; it reportedly took “researchers” at 4chan and Reddit to grasp the darker meaning of certain key words and phrases. Pizza, for example. Since the emails came from the likes of Clinton campaign manager John Podesta, there had to be more to it than some family-friendly pizza joint in Washington called Comet Ping Pong; a closer investigation revealed that the initial letters of cheese pizza were the same as those of … child prostitution! The dark code had been cracked, and the crackers were off to the races. Prostitution, child trafficking, ritual murder, rape—you name it—all came together in the (nonexistent) dungeons of Comet Ping Pong. Suddenly there was a massive outbreak of videos with titles like “BREAKING: Hillary Clinton Is A Satanic Child Abuser, Wikileaks Dump Suggests” and “Satanic Pizzagate Is Going Viral Worldwide (Elites Are Terrified).” Clinton, Podesta, and Comet Ping Pong owner James Alefantis were all ringleaders. (After all, Alefantis had once hosted fundraisers for Barack Obama and had posted a photo on his Instagram account of a little girl wearing a T-shirt whose Pizza Hut logo had been altered to Pizza Slut!)

Just what effect, if any, all this cyber ayahuasca had on the presidential election is impossible to know. But it certainly had real-world consequences, beginning with death threats and other phone harassment directed at Comet Ping Pong, which reportedly spent $70,000 on security upgrades. Soon the Pizzagate prion infected the mind of 28-year-old Edgar Welch, a North Carolina father of two, to the point where he grabbed an assault rifle and drove to Washington to investigate the evil goings-on. After pointing his gun at some Comet Ping Pong employees (who fled), he fired a few shots into a computer closet, then began searching for evidence of child sex abuse. Finding none, he surrendered to police. On March 24 he pleaded guilty to weapons and assault charges.

That same day Alex Jones, the flamboyant, conspiracy-minded host of “The Alex Jones Show” and founder of the InfoWars website, issued a lawyer-driven “apology” for his part in spreading the story—“to the extent our commentaries could be construed as negative statements about Mr. Alefantis or Comet Ping Pong.” He wasn’t the only one who did it, he said. And he did not apologize to Clinton or Podesta.

With the election over and a contrition ritual completed, the bacillus was weakened. Yet it lives on. The day after Jones’s statement, a small group of child-protecting warriors held a demonstration in Lafayette Square demanding that the government launch an investigation into Pizzagate—or, as the demonstration’s organizer now preferred to call it, Pedogate.

OK, so Pizzagate is an extreme example. It’s also just one strain of the phenomenon that’s been loosely labeled fake news, which at its core is as old as news itself. But with so many warp-speed ways to alter and spread images and storylines—and so much upheaval around the news organizations that have traditionally delivered them—the phenomenon has serious implications, not just for democracies and political candidates but for institutions that view themselves as grounded in the pursuit of fact-based truth.

“We’re again at a moment in American history, and in world history, where the institutions and the media systems that have been developed—they felt stable at the time, but they just were never all that stable,” says Vincent Price, Penn’s outgoing provost and the Steven H. Chaffee Professor of Communication and Political Science. When I ask, in a convoluted way, what the cure might be for what seems like a steady erosion of reliance on facts, he responds: “I don’t have a cure, but I’m not sure that it’s a disease. The metaphor matters here. It is a problem, and it is a challenge. But it’s not a new problem. Look at the press system to the extent that it existed at the time of this nation’s creation, which engaged in all kinds of scurrilous attacks, and what news there was, was not exactly faithful to fact.

“Our nation does represent a flowering of Enlightenment thought that is remarkable to this day,” he adds. “Our challenge is to figure out how they accomplished that. Somehow, they navigated through it.”

Three decades ago, when Price was starting his career as a political-communication scholar, “the primary complaint was with the centralization of our media system, its investment in objective journalism, and how that produced a kind of mainstreaming of understanding and knowledge and made it very difficult for fringe viewpoints to be entered into the conversation,” he recalls. “What people begged for then was exactly what we’re living with today: ‘We need to return to a party press system, and move away from objective journalism and so on.’”

He hints at a smile. “Well you know, sometimes you get what you wish for.”

“We’ve talked a lot on this program about ‘fake news,’ and I know it’s a term that you detest,” Brian Stelter was saying to his guest a couple of months ago. “What should we be calling made-up stories instead?”

The shiny-domed Stelter is the host of Reliable Sources, CNN’s Sunday morning news-media show, which examines, in its own words, “the story behind the story—how news and pop culture get made.” Facing him was Kathleen Hall Jamieson, director of the Annenberg Public Policy Center, calm and gimlet-eyed behind black-frame glasses.

CNN, as you may have heard, has come in for some harsh words from President Donald Trump W’68, who has accused it and other mainstream news organizations of purveying “fake news” and even branded the media—or most of it—as the “opposition party” and the “enemy of the people.” The network, like many journalistic outlets, has been hitting back, trying to understand the new rules of the game, even as it reinvents itself. Having a prominent scholar define the terms is a good place to start.

“I’d like to call it viral deception, and I’d like to use the VD acronym, because I’d like to associate it with venereal disease,” Jamieson responded. “We don’t want to get venereal disease. If you find someone who’s got it, you want to quarantine them and cure them. You don’t want to transmit it. By virtue of saying fake news, we ask the question: ‘Well, what is real news?’—and you invite people to label everything they disapprove of fake news. As a result, it’s not a useful concept.

“What are we really concerned about?” she added. “Deception. And deception of a certain sort that goes viral.”

In an interview a couple of weeks later, Jamieson tells me that she and others at FactCheck.org have been using the VD moniker since the early days of that organization, which she co-founded at the APPC in 2003.

She cites a strain that circulated in 2008 about then-presidential candidate John McCain, who was cyber-whispered to have “crashed five planes” during his days as a Navy fighter pilot, and in 1967 was supposedly responsible for a lethal accident on the USS Forrestal.

That story’s narrative structure was based on an anonymous person saying, “‘I know somebody who knows somebody who worked on the Forrestal where John McCain dropped his bombs in his plane early, and as a result people died,’” she explains. “All the details that would characterize a good narrative were there, so by the time you finished reading it, you’d entered into another reality—which is part of the way these things persuade. You’re not just laying down a claim. Your brain is laying down the traces of a coherent narrative structure that can assimilate to the candidate without your even accepting the narrative. So it’s very hard, once you process these things, to un-process it, to take it out of memory.”

That’s a very different phenomenon from Trump “attacking, as fake news, things in mainstream news to which he objected,” she adds. “At that point, when you say fake news, you now have a partisan cue attached to it. So are you using it as it is used by President Trump, or are you using it as it is being used in general? Then, when the Left started to say, ‘Well, what he is saying is fake news,’ they were making the same category error. Now we have a term that is meaningless.”

On March 10, the Bureau of Labor Statistics released the first jobs report of Trump’s tenure. The numbers were positive, as they had often been during the last year of the Obama administration. White House Press Secretary Sean Spicer was asked a pointed question: “In the past, the president has referred to particular jobs reports as ‘phony’ or ‘totally fiction.’ Does the president believe that this jobs report was accurate and a fair way to measure the economy?”

“I talked to the President prior to this, and he said to quote him very clearly,” Spicer replied. “‘They may have been phony in the past, but it’s very real now.’”

Michael Delli Carpini C’75 G’75, the communication professor who serves as dean of the Annenberg School, is talking about a phenomenon that he calls multiaxiality. Since most people now get their information from such a “wide variety of sources—some of them still controlled by traditional gatekeepers like journalists and media outlets, but many of them not—there is no one place where the public agenda and public dialogue is set,” he explains. “As a result, you get views that vary in their ideological point of view—and in their basis in fact. You’re literally just one mouse click away from the opposite of almost anything you hear, factual or not. That puts a real burden on people to be able to say, ‘Well, I trust this and I don’t trust this,’ especially in an environment where trust in traditional media is at an all-time low. That’s a Petri dish for fake news, or at least for ‘alternative facts,’ however you might want to define that.”

The problem is not so much that the news is fake, says Marwan Kraidy, the Anthony Shadid Chair in Global Media, Politics and Culture at the Annenberg School, and director of its Center for Advanced Research in Global Communication. “It’s that there are now new ways of building momentum for fake news to circulate and achieve the trappings of reality that are dangerous. And it’s not that fake news began happening now. It’s that now it’s happening in a much more sophisticated way.”

Kraidy is no stranger to viral deception, having seen it work its dark magic during the Arab Uprisings in 2013, and more recently in videos that claim to have been filmed in war-torn Aleppo but, on closer inspection, were clearly made in places like Beirut and Tripoli.

“Plato’s metaphor of the cave, where people mistake shadows for the real thing,” he adds, “is on steroids in the digital age.”

“We’ve had anonymous and pseudonymous sourcing of content before,” says Jamieson. “We haven’t had the capacity to deliver it and get it relayed as efficiently as it can now be delivered and relayed in the internet age. The difference is in the extent to which the reach can penetrate unexpected places, and be relayed and relayed and relayed far beyond the ability of the individual who created it to reach the targeted audience, because likeminded individuals keep sharing it.”

Two months ago, a story from a site called USPoln.com began circulating on some Facebook feeds. It claimed that Secretary of Health and Human Services Tom Price had, in a CNN town hall, told a cancer patient: “It’s better for our budget if cancer patients die more quickly.”

“Disgusting!” commented the Facebook friend who posted it. Of course, Price never said that. While Price did explain why he thinks Medicaid is flawed—and argued that the Republicans’ healthcare bill would have strengthened it—his remarks were otherwise uncontroversial. (Full story at FactCheck.org.) It turns out that a site called Politicops.com, part of the Newslo umbrella of websites, took a factually correct (albeit disapproving) account from RawStory.com—and added some made-up exchanges between Price and the cancer patient. Only if you happened to read USPoln.com’s “Contact Us” page would you find an acknowledgement that it’s a “News/Satire” site. In other words, The Onion, minus the intentional humor.

Shortly after the 2016 election, Delli Carpini wrote an article titled “The New Normal? Campaigns & Elections in the Contemporary Media Environment” that appeared in an online journal produced by Bournemouth University’s Centre for Politics and Media Research.

“While disputes over ‘the facts’ are common, Trump took this to a new level, demonstrating that a candidate can make statements that were verifiably false, be called out on these misstatements, and pay no political price for them,” Delli Carpini wrote. “His campaign shattered the already dissolving distinction between news and entertainment …”

Trump is “not an aberration,” he concluded. While the context of issues and personalities will obviously shape the selection of candidates, “the days of campaigns that are controlled by a stable set of political and media elites are over.”

“It almost doesn’t matter what the underlying reality is,” Delli Carpini says back in his office when I ask him about his use of another academic word, hyperreality. “What matters is the mediated version of it.”

After the White House and some media types publicly disagreed over the size of the (photographed) crowds at the Presidential Inauguration and whether or not it was raining, he adds, “the debate went from, ‘Well, let’s figure out what’s the truth here’ to ‘Now that we are having this debate, what are its political implications?’ And those ‘alternative facts’ act as facts in public discourse.”

To an extent, these phenomena have been “percolating and emerging and evolving over decades,” he adds. “But it has kind of hit a watershed moment.”

Deception, viral or otherwise, requires a believer.

“Our minds are organized, due to their structure, to manage coherence,” says Sudeep Bhatia, an assistant professor of psychology who has published numerous articles on subjects related to learning and cognitive processes.

In his drab Solomon Labs office, he starts off by drawing a Necker cube, the famous two-dimensional representation of a three-dimensional cube, and points out that there are two coherent interpretations of the cube’s direction. When I look at it, it’s slanting in one direction; after a while I can see it slanting in the other. But I can’t see it slanting both ways at the same time. Because of the interactions between the nodes of my brain’s neurons that represent the cube’s points, Bhatia explains, “the coherence property of those interactions” would cause me to perceive the other points as also fitting into that directional structure. When I switch perceptions, “all of them switch together.”

“This is a visual property, but the ideas of coherence also apply to high-level cognition,” he explains. Then he walks me through a few of the more relevant experimental findings over the years.

The first is confirmation bias, which “refers to a tendency in human decision-makers to interpret information in order to confirm rather than disconfirm prior beliefs.”

“Let’s say I want to know for sure where Obama was born. If my hypothesis is that he was born in Kenya, maybe I’ll Google ‘Was Obama born in Kenya?’ The kind of information I’ll get is the kind of information that confirms it. The confirmation bias takes on many different forms,” including the ways we actively search for information.

“In order for the brain to consolidate its memory [of information], it represents confirming information for your belief. So if you believe that Obama was born in Kenya, and I give you five pieces of evidence—four of them saying that he wasn’t born in Kenya and one saying that he was—five days later, you’ll remember the piece of evidence that supports your hypothesis rather than remembering the pieces that don’t.”

Then there’s the belief bias. “If I give you an argument—All men are mortal, Socrates is a man, therefore Socrates is mortal—your judgment of the argument’s validity depends on whether or not the conclusion is true. But if I say All men are mortal, Socrates is mortal, then Socrates is a man—that seems valid, but the argument itself is not valid. So if you ask people to judge the validity of the argument, they’ll use their belief about its conclusion to judge its validity, which is not right.”

Fake news “usually doesn’t have well-argued conclusions,” he notes, “but if the conclusions support people’s beliefs, they’ll take the news as being valid—or at least the arguments in the news as being valid.”

Bhatia’s current research has to do with “training models of human knowledge representation on news-media sources,” such as Fox News versus CNN, or Breitbart versus the Huffington Post.

“We can tell you, in a rigorous manner, how someone who’s in one case watching Breitbart or Fox News and in another case watching MSNBC, how those people would develop different ways of representing the world around them—specifically representing people in the world around them, whether it’s politicians, immigrants, African Americans, and so forth. We’re still gathering our data, but the hope is that you can quantitatively predict the structure of how people see other people in the world, based on how our models learn information about people in the world.”

“Everything we continue to learn about how human beings make decisions is that we are biased information-processors by nature,” says Delli Carpini. “The best work on this suggests that we have an emotional response well before our brains can kick in. As soon as we watch or hear or read something, we’re processing that information in a way that biases it in favor of the way we think about the world. That leads us to sometimes avoid information that doesn’t fit our needs, and sometimes to ignore or reinterpret information.”

By now most people have heard that a substantial number of the fake-news stories from last year’s presidential campaign were cooked up by Macedonian teenagers and other mercenaries looking to make some quick cash.

“I was kind of surprised to read stories that talked about how much money there is in this,” says Eugene Kiely, director of FactCheck.org, “because it seems like I’m wasting my time here trying to track down facts when I could just make ’em up and make a living.”

Motivations range from purely mercenary to zealously political, and all manner of in-between. Some websites specialize in lifting stories about political figures and, with just a few tweaks of a quote or context, changing them from negative to positive and vice versa, depending on the political leanings of their audience.

“A lot of these fake news stories will show up on one site, and then they’ll be repurposed and reused, sometimes verbatim, on other sites,” says Kiely. “What once was viral email amongst friends is now spread across these many different websites—and then it’s spread on Facebook and Twitter, which increases exposure even more.”

Sometimes the culprits aren’t even human, or at least humans aren’t always the ones pulling the trigger at the scene of the cyber-crime.

“Don’t underestimate bots,” wrote Samuel Woolley (project manager of PoliticalBots.org) and Phil Howard (professor at the University of Oxford and the University of Washington) in an article for Wired last May. “There are tens of millions of them on Twitter alone, and automated scripts generate 60 percent of traffic on the web at large.”

After tracking political bot activity around the world, they concluded that “automated campaign communications are a very real threat to our democracy.” They also warned that bots “could unduly influence the 2016 election.” (Oh well.)

“In past elections, politicians, government agencies, and advocacy groups have used bots to engage voters and spread messages,” Woolley and Howard wrote. “We’ve caught bots disseminating lies, attacking people, and poisoning conversations.”

For a campaign director facing a tight race, they noted, the decision boils down to: “If an army of bots can seed the web with negative information about the opposing candidate, why not unleash them? If you’re an activist hoping to get your message out to millions, why not have bots do it?”

An article titled “How to Hack an Election” in Bloomberg Businessweek chronicled how a hacker named Andrés Sepúlveda used Twitter bots to manipulate elections in Mexico and South America. (He is now serving a 10-year sentence in Colombia for cyber crimes committed during that country’s 2014 presidential election.)

Knowing that “accounts could be faked and social media trends fabricated, all relatively cheaply,” the authors explained, Sepúlveda “wrote a software program, now called Social Media Predator, to manage and direct a virtual army of fake Twitter accounts. The software let him quickly change names, profile pictures, and biographies to fit any need. Eventually, he discovered, he could manipulate the public debate as easily as moving pieces on a chessboard—or, as he puts it, ‘When I realized that people believe what the internet says more than reality, I discovered that I had the power to make people believe almost anything.’”

“The story about what happened during the last [US] election remains to be written,” says Marwan Kraidy. “We have snippets about [political operatives] having hundreds of data points about individuals that allow you to manipulate their tiniest desire and preferences to orient them toward your own goal. I think we’re just beginning to understand this.”

And the desire to manipulate takes on strange dimensions.

“Buzz whoring is perhaps the last living piece of the American Dream,” a man named David Seaman wrote in Dirty Little Secrets of Buzz: How to Attract Massive Attention to Your Business, Your Product, or Yourself.

Published way back in 2008, the book promised to teach readers “how controversy, scandal-mongering, and social networking can turn your message into a viral sensation.” What’s more, wrote Seaman: “A successful viral video or concept will take you along for the ride and not the other way around. At a certain point, it takes on a life of its own.”

Seaman would know. He was recently revealed to have been one of the driving cyber-forces behind Pizzagate.

Early last year, the Pew Research Center reported that 62 percent of Americans got some news from social media, with 44 percent using Facebook and another 9 percent getting it from Twitter. Those numbers likely rose during the heated presidential campaign.

In the “algorithmic culture of social media, everyone becomes the target and, as a result, the victim of their own self-created echo chamber,” says Kraidy. “Friends of mine who get their news from Facebook and Twitter tend to get news that they already believe in. They want to confirm their own biases, and the way the technology works is in exactly that direction.”

“It’s a well-known finding that people tend to trust their friends and relatives more than they trust complete strangers, all else being equal,” says Sudeep Bhatia. “So Facebook and Twitter, which have allowed friends to share information, have, by doing so, reduced relative trust in more established news outlets. There’s a lot more social information transmitted, and because we trust information from close others, you see it generating all sorts of bubble effects, right?”

The breakdown of trust in what is now often referred to as “mainstream media” has been dramatic. In 1956, the American National Election Study found that 66 percent of Americans (including 78 percent of Republicans and 64 percent of Democrats) thought newspapers were “fair,” while just 27 percent said they were unfair. For many reasons—a perception that journalists are increasingly urban and elitist, the rise of talk radio and highly opinionated pundits, the proliferation of alternative outlets—faith in the old Church of Walter Cronkite has given way to angry, suspicious factions of informational Gnostics. (Ironically, one recent study notes that while 75 percent of US adults “see the news media as performing its watchdog function,” roughly the same percentage think the news media are biased. Conservative Republicans are the most distrustful at 87 percent.)

“What’s changed is it’s now an information environment where, if you have a [political] bias, you have more outlets than ever before to feed that bias,” says Delli Carpini. Half a century ago, “if I wanted to get the news, I turned on one of the three channels, which were almost all identical, and maybe I read the newspapers in my community and a major news magazine. The information was never not biased, but they struggled to present it in a more rational, unbiased way. That’s gone, right?

“Part of it, though, is on the shoulders of traditional news media, because they oversold the ‘I’m not biased’ argument. There is no such thing as truly pure, objective reporting. But now that notion of bias is so embedded in people’s minds that they don’t trust even basic statements of fact anymore.”

In December, conservative talk-radio host Charlie Sykes lamented the transformation that he had seen and, at times, been part of: “Over time, we’d succeeded in delegitimizing the media altogether—all the normal guideposts were down, the referees discredited,” he wrote in The New York Times. “That left a void that we conservatives failed to fill … We destroyed our own immunity to fake news, while empowering the worst and most reckless voices on the right.”

Where this all leaves universities and other “custodians of knowledge,” to use Kathleen Hall Jamieson’s phrase, is worth pondering.

“The way a community talks to itself matters tremendously,” Vincent Price is saying. “Right now the systems we use to talk collectively—to debate, to carry out our politics, even to educate in the classroom—are undergoing tremendous change. Much of that is productive, but change usually produces, at first, not enlightenment but confusion. I tell students, who are sometimes intolerant of confusion, that they just have to get used to it, if they want to learn. Because the path from not knowing something to knowing something requires moments of confusion.

“Universities are, in large part, Enlightenment projects,” he adds. “We need to be more of a public voice—not to take sides, but as a major place where knowledge resides, and to share and translate that knowledge in ways that help the public discourse. It won’t be without pain and suffering along the way, and there will be instances where rumor-mongering takes hold, to the detriment of all of us. But we’ve lived through it before. It’s an inevitable product, the natural evolution, of democratic societies. And since universities—and journalists—are important voices whose legitimacy is directly or indirectly being challenged, we need to up our game to provide useful information that can be trusted.”

Price pauses for a moment, then smiles—a cheerful, Enlightenment-project smile.

“What a time to be an educator!” he says. “And what a great time to be a student of political communication.”

SIDEBAR

Just the Facts, Please

“We’re very careful about using the word lie,” says Kathleen Hall Jamieson, the Elizabeth Ware Packard Professor of Communication who serves as director of the Annenberg Public Policy Center. She’s referring to FactCheck.org, the APPC-based website she helped launch in 2003 with Brooks Jackson, its first director. “We’re an academic site. I’m not going to make the claim that someone lies unless I know they did it deliberately. And the number of instances in which you know someone did it deliberately is extraordinarily small.”

That kind of restraint sets FactCheck.org apart from some other fact-checking outfits in the US, many of which are part of news organizations. Nor does FactCheck.org indulge in gimmicks that claim to measure the degree of fabrication involved.

“Calling [a number of] ‘Pinocchios’ and using a Truth-O-Meter introduces a high level of subjectivity,” says Jamieson, who was dean of the Annenberg School from 1989 until 2003. “We don’t do it for precisely that reason. It’s always a judgment call.”

There is, of course, a downside to that restraint. “It makes us less quotable,” she admits. “Because it’s easier for a journalist to say, ‘That earned a Pants on Fire’ than it is [to write] paragraph after paragraph to say, ‘No, that is taken out of context.’”

Even fact-checkers have come under fire lately from certain quarters. Sometimes, of course, the shots come from those affronted by the fact that their veracity is being challenged, period.

“We’ve been attacked by the Right and Left,” says Eugene Kiely, FactCheck.org’s director since 2013. “During the 2012 campaign, the Obama administration put out a six-page press release that attacked us, without even having the courtesy of sending it to us first. When campaigns and candidates and elected officials don’t like what they’ve heard, they respond. Now, Donald Trump has taken it to a whole new level. Declaring that organizations like The New York Times and Washington Post are ‘fake news’—that’s never happened. We also hear it from a candidate’s supporters.” Kiely explains that a group of Annenberg students, drawn from a fellowship program, are instructed to forward charges of bias to him so he can respond.

“In general, the campaign that perceives that it’s being damaged by fact-checking is going to be the campaign that attacks the ideology or accuracy or both,” says Jamieson. “This year there was more fact-checking of Trump because there was more of Trump to fact-check. So there’s more likely to be a general attack against all fact-checking from the Republicans than from the Democrats.” But, she adds, Republicans “in the moderate part of the world, like [talk-show host] Charlie Sykes,” have voiced their respect for FactCheck.org.

Some political organizations and news sites have taken to lumping “all the fact-checkers, and the procedures that we use, together, as if we are all the same,” she notes. “So you’ll see articles that attack fact-checkers as liberal, and then they attack the Truth-O-Meter that is used by one of our colleague fact-checking sites, and the ‘Pinocchio’ that is used by another. Well, we don’t use either one. But we’re then lumped in with the broader attack.”

I get a better sense of why that sticks in her craw when she sends me a link to an article by one of those attackers, LibertyHeadlines.com. It’s titled “DISHONEST: How Fact-Checkers Trivialize and Undermine Truth-Seeking.” Even though the article grudgingly acknowledges that “FactCheck.org has not been brazenly partisan, despite being very much a creature of the mainstream media,” it spends five paragraphs attempting to smear the organization by association because of the perceived sins of an “affiliated” organization—the Annenberg Foundation—which “gained notoriety” on account of its “commentary related to Barack Obama’s professional ties to domestic terrorist Bill Ayers, with whom Obama ran the Chicago Annenberg Challenge from 1995 through 2001.” One wonders how many readers will remember the “not brazenly partisan” part of the analysis after that.

In addition to a lengthy post titled “How to Spot Fake News,” FactCheck.org and other fact-checking organizations are working with Facebook to weed out viral deceptions on that site.

“Facebook has created a list of stories they provide to us and other fact-checkers that have been flagged by readers as potentially suspicious,” explains Kiely. “We can rank them [by readership] and know that these are the ones that have gone viral and that we should be paying attention to. If we’re getting questions from our readers about a story on the Facebook list, and it’s getting a lot of attention on Facebook based on its ranking, then we’ll investigate and write it up [as] true or false.”

Two years ago FactCheck.org expanded its mission to include a science-based focus, known as SciCheck. It focuses “exclusively on false and misleading scientific claims that are made by partisans to influence public policy.”

“In the science area, I think we’re dealing with a different kind of phenomenon,” says Jamieson. “I am more optimistic that we are going to overcome the biases in that area. They’re not manipulating us to get a specific electoral outcome, so we don’t have all of the polarizing factors that we have in an election. As a result, it may be easier for us to get people into a structure in which we sit down together to work through their patterns of inference to see if they’ll draw a different inference if we can slow down and be more analytic.” —S.H.