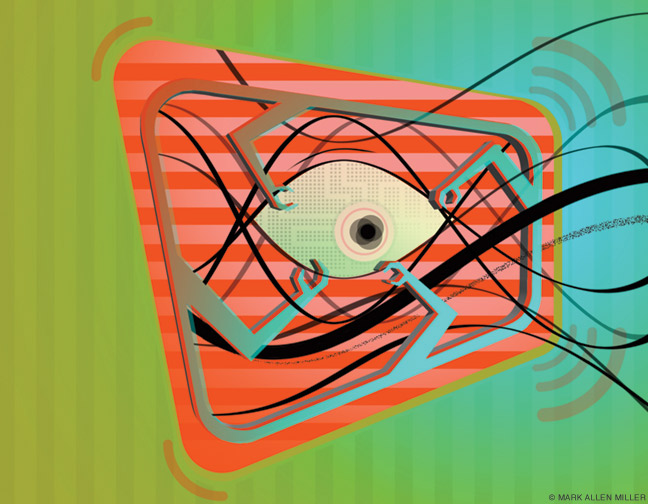

Can our devices learn about our state of mind from how we speak?

What can increasingly smart computers learn about you from your voice? A lot more than you might think, according to Penn communications professor Joseph Turow C’71 ASC’73 Gr’76. And when you get to know him, it’s not surprising to learn he’s written a book about it.

When Turow was a young boy in Brooklyn, his parents wanted him to be a lawyer, but he was fascinated by advertising. In high school he read every book he could find on the subject and even subscribed to Advertising Age magazine. When he got to Penn, he became an English major, figuring it would help him succeed as a copywriter. But the more he learned, the more critical of the industry he became.

A revelatory undergraduate class in media led him to enroll at the Annenberg School for master’s and doctoral degrees. There he was a research fellow for Robert Lewis Shayon, who in addition to teaching at Penn had a storied career as a radio producer and TV critic. Longtime Annenberg Dean George Gerbner became Turow’s PhD advisor.

Turow went on to teach at Purdue University for a decade before returning to Penn, where he’s remained ever since and is now the Robert Lewis Shayon Professor of Media Systems & Industries at Annenberg. In that role he’s still pursuing his interest in the advertising industry. Its digital transformation, he says, led him to write five books on the topic. He recently spoke about his newest one, The Voice Catchers: How Marketers Listen In to Exploit Your Feelings, Your Privacy, and Your Wallet (Yale University Press), with Gazette contributor Daniel Akst C’78.

Your book is about what can happen when companies digitize people’s voices and apply the power of computer learning to this data. Just what can companies tell from my voice?

I describe an emerging industry that is deploying immense resources and breakthrough technologies based on the idea that voice is biometric—a part of your body that those in the industry believe can be used to identify and evaluate you instantly and permanently. Most of the focus in voice profiling technology today is on emotion, sentiment, and personality. But experts tell me it is scientifically possible to tell the height of a person, the weight, the race, and even some diseases. There are actually companies now trying to assess, for example, whether you have Alzheimer’s based upon your voice.

So there are some upsides to advances in voice technology?

Absolutely. Diagnosis is one. Another is authentication—say when you call your bank and they use your voice print to confirm your identity. That’s fine.

But in the book you are mostly concerned with the problems that could arise when companies are able to harvest huge quantities of voice data.

The issue is that this new voice intelligence industry—run by companies you know, such as Amazon and Google, and some you don’t, such as NICE and Verint—is sweeping across society, yet there is little public discussion about the implications. The need for this conversation becomes especially urgent when we consider the long-term harms that could result if voice profiling and surveillance technologies are used not only for commercial marketing purposes, but also by political marketers and governments, to say nothing of hackers stealing data.

How pervasive is this technology?

There are hundreds of millions of smart speakers out there, and far more phones with assistants, listening to you and capturing your voice. Voice technology already permeates virtually every important area of personal interaction: as assistants on your phone and in your car, in smart speakers at home, and in hotels, schools, and even stores, where they stand in for salespeople.

Why do people bring these things into their homes?

They’re seduced by curiosity and by the novelty, convenience, charm, and quite low price of the smart speakers. My wife got an Amazon Echo as an office “potluck” Christmas gift. Couple that with the media coverage of the devices, which often makes it seem obvious that people have them and are oblivious to the voice-profiling possibilities.

And is this all about Google and others listening to what I say? Or is it about how I say it—that is, the tone and volume and pitch and so forth?

These devices listen to and respond to your words, of course, and make use of their meaning to do your bidding, and for certain marketing purposes. But my concern in the book is that the assistants are tied to advanced machine learning and deep neural-network programs that companies can use to analyze what individuals say and how they say it with the goals of discovering when and whether particular people are worth persuading, and then finding ways to persuade those who make the cut.

Amazon and Google have several patents centered on voice profiling that describe a rich future for the practice. And advertising executives told me they expect that, not too many years from now, voice profiling will be used to target people differently based on what marketers infer about them in real time from their voices. But consider the downside: we could be denied loans, have to pay much more for insurance, or be turned away from jobs, all on the basis of physiological characteristics and linguistic patterns that may not reflect what marketers believe they reflect.

You see a lot of this as potentially right around the bend, but what’s going on now?

Amazon’s Halo wristband already claims it can tell you how you sound emotionally to your boss, mother, spouse, and others. It’s the company’s first public proof of concept. But Amazon and Google are not yet using the maximum marketing potential of these tools, evidently because they are worried about inflaming social fears. Contact centers, which are out of the public eye, point toward the future. If you call an 800 number and you’re angry or worried or happy when you speak to the interactive voice response (IVR) system or to a live person, there’s a decent chance a computer in the background will draw conclusions about your state of mind while it looks at the history of your purchases to determine how important a customer you are. If it deems you substantially worried or angry, and important, it might instruct the agent to give you a substantial discount to make you feel better. Or the IVR might triage you to a human agent who is good at speaking to people with those emotions, and especially good at “upselling” them, that is, convincing them to buy more than they intended.

Isn’t the horse out of the barn on digital privacy? Haven’t we long ago handed over all kinds of information about ourselves without any control of how it’s used?

National surveys I’ve conducted with colleagues found that about 60 percent of Americans say they would love to control that information, and they try now and again, but they generally have concluded they won’t succeed. So a majority of Americans are resigned to companies exploiting their data, often even giving their consent, because they want or even need the services that media firms and marketers offer. The question is, will the same pattern happen with voice profiling?

So what can we do to protect ourselves from the growing power of the voice intelligence industry?

The first thing to realize is that voice assistants are not our friends no matter how friendly they sound. I argue, in fact, that voice profiling marks a red line for society that shouldn’t be crossed. If the line is crossed, voice profiling will be just the beginning. Think of what marketers can learn from studying your face as you walk around your home or your block … or how wrist-based sleep devices can lead to algorithmic evaluations of your psychological state.

When it comes to understanding the implications of a company’s creation and use of your voice print, there can be no such thing as informed consent—people don’t know what conclusions about their minds they are consenting to, or what the implications might be. That’s why I suggest in the book that regulators should ban companies from using voice profiling as part of their marketing activities. Voice-based authentication is fine, for the most part, but to allow companies to use your voice to categorize you for the purpose of selling things, ideas, or political candidates—that’s beyond the pale.